Roman Strand, Senior Manager Master Data + Data Exchange at GS1 Germany, on the success and future of EANCOM® and the GS1 application recommendations based on EANCOM®.

Roman Strand has been working for GS1 Germany for more than 20 years and is, among other things, the head of the national EDI/eCommerce specialist group. In this interview, he explains the role of the EANCOM® standard and why the GS1 specification and its associated application recommendations will continue to shape mass data exchange in the future.

Hello Mr Strand, you have been working for GS1 Germany for over 20 years. What were the major focal points of your work during this period?

I have spent my entire time at GS1 in the national and international standardisation department. In the early years I trained under Norbert Horst, who helped develop the EANCOM® standard in Germany. During this time I learned a lot and also started working with GEFEG.FX. I have remained loyal to this department and to national and international standardisation to this day. In various committees, I drive the further development of our standards together with our partners from the business community. I also work as a trainer, running EDI courses in which our customers are trained to become certified EDI managers, among other things.

Which topics did you deal with a lot last year?

Alongside the further development and maintenance of our EANCOM® and XML standards, we deal with current digitalisation topics and check to what extent new innovations could be relevant for our work at GS1. We also celebrated a big anniversary last year: EANCOM® has now been on the market for more than 30 years, and our application recommendations have been around for more than 20 years.

Why was the EANCOM® standard developed and what function does it fulfil?

The EANCOM® standard was developed before my time at GS1. Its parent standard, EDIFACT, is far too large and complex. The great achievement of the EANCOM® standard is that it reduces this complexity to the elements that are important for our customers. Approximately 220 EDIFACT messages became 50 EANCOM® messages, which were then further adapted into industry-specific EANCOM® application recommendations. The leaner a standard is, the more economically and efficiently it can be implemented. This simplification is what made widespread use of the standard by many companies possible in the first place. We also translated the English-language standard almost completely into German, which was another major simplification for the German community.

How were you personally involved in the development of the EANCOM® standard?

The development of the EANCOM® standard is mainly driven by our customers from trade, industry and other sectors. They pass their requirements on to GS1, where they are processed in the EDI/eCommerce specialist group. As a representative of GS1, I am one of the people who then implement the expert group's decisions.

How should we picture GS1's role in this process?

There are many published standards on the market for electronic data exchange between companies, but very few of them are backed by a reliable organisation that is continuously committed to developing its standard further. With us, clients can be confident that implementing the standard is a future-proof investment. If, for example, a change in the law also has to be reflected in the standard, we adapt the standard.

Furthermore, we are responsible for the documentation and specification of the EANCOM® standard. Here, too, our focus is on simplification. Among other things, we ensure that codes from code lists are used instead of free-text fields wherever possible, because free text often leads to errors in automated data processing.

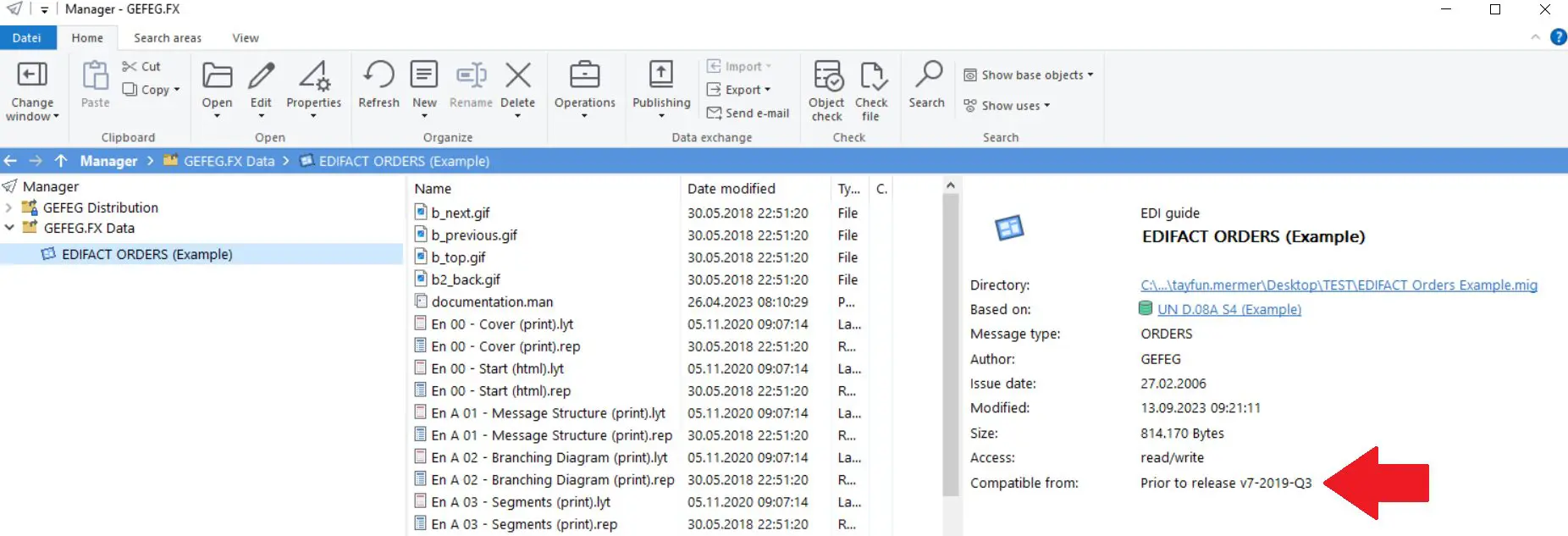

You use GEFEG.FX for data modelling and documentation of the EANCOM® standard. For what reasons do you rely on the software for these work steps?

I have been working with GEFEG.FX for many years now, and it took me a while before I could really use the software to its full extent. In the foreground you have your specification, and in the background you have the standard, which is linked to the corresponding code lists. This means that, as a user developing my own specification, I cannot do anything in the foreground that is not already defined in the underlying standard. As soon as the specification deviates from the standard, GEFEG.FX reports an error, so unintended deviations cannot slip through. For me, this control function is the main advantage of GEFEG.FX as a standards tool. Without it, a comma is easily forgotten or some other small syntax error overlooked.

With the standard running in the background, validations and checks can be carried out conveniently. In addition, documentation can be created quickly at the touch of a button using the various output options. Thanks to these functions, you don't have to start from scratch every time, which saves a lot of time in many work steps.

How do you assess the future development of the EANCOM® standard?

For me, EANCOM® is classic EDI, which many people working in innovative companies consider old-fashioned. In my opinion, however, classic EDI offers many advantages. It is a defined structure that works in the mass data exchange business and will continue to work in the future. I once said to a colleague who has been working in EDI at GS1 as long as I have: "Until we retire, we don't have to worry about EANCOM® being shut down."

That is because the business is still running and demand remains high. There have been, and continue to be, new technologies that are supposed to replace classic EDI. When I started at GS1, there was a lot of hype about XML. The same happened years later with blockchain technology and today with APIs. All three technologies were seen as replacements for classic EDI, but in the end they are all just additions that offer supporting possibilities in the EDI area. Mass data exchange will continue to be handled by classic EDI, and I therefore assume that the future of the EANCOM® standard is also secure.

Are there any challenges or difficulties that need to be considered in the further development of the standard?

The problem with a global standard is its complexity. Over the years, new information has been added to the standard. For example, every relevant change in the law led to new additions, yet nothing was ever deleted, even if no one had used it for 20 years.

We should therefore work more towards lean EANCOM® standards that contain only the information that is absolutely necessary. After all, this reduction of complexity is one of the central strengths of GS1 standards. We achieve it above all by developing application recommendations in which the underlying standard is specified even further for a specific application. As a result, less information is needed and there are fewer potential sources of error.

We are nearing the end of our conversation. Is there anything else important beyond the EANCOM® standard that you would like to talk about?

Yes, we are currently working on a semantic data model and are thus building a new content basis that contains all relevant information to be exchanged electronically. GEFEG is also involved in this development process. The data model lets our customers freely decide which syntax they use for their electronic data exchange formats. This groundwork will therefore help users become more independent of any specific syntax in the future and decide freely whether XML, EANCOM® or even an API should be used for data exchange.

Mr Strand, thank you for this interview.